Hello folks,

I don't think I have shared this before, but lately I have been reading up on AI, and on neural networks in particular.

I was first inspired by Creatures (watch this video: https://www.youtube.com/watch?v=Y-6DzI-krUQ&t=372s) and came up with the idea of building a neural network plus an environment for it. My starting point was this implementation: https://github.com/NMZivkovic/SimpleNeuralNetworkInCSharp.

Creatures AI

There are plenty of sources describing how Creatures worked. Two references I found very useful:

- https://creatures.fandom.com/wiki/Concept_lobe

- http://double.nz/creatures/brainlobes/lobes.htm#lobe7

The first link is not very detailed but gives a good overview, while Mr Double in the second link clearly understands how Creatures works. On the other hand, the interesting parts about the concept lobe are missing, and in 2023 this kind of information is hard to find online (or I have been too lazy to look it up so far).

As a very high-level summary: the agents in the computer game Creatures use a simplified four-layer neural network whose size and layout were dictated by the technical limitations of the time the game was created. However, the clever way these limitations were worked around resulted in a detailed ecosystem that is complex to understand, all the more so when people have to reverse-engineer it.

Neural Networks

A neural network is basically a system of software objects that mimics a brain-like structure. In a brain, neurons are interconnected by synapses, and a neural network simulates this as well. In the simplest case, each neuron evaluates a given function on its inputs and then signals 0 or 1 on its outputs: not activated or activated.
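To make the "0 or 1" idea concrete, here is a minimal Python sketch of a single neuron, purely for illustration (the project itself is .NET). The function name, the weights and the threshold of 0.5 are assumptions made up for this example.

```python
from typing import Sequence


def neuron_output(inputs: Sequence[float],
                  weights: Sequence[float],
                  threshold: float = 0.5) -> int:
    """Return 1 (activated) if the weighted sum of the inputs
    reaches the threshold, otherwise 0 (not activated)."""
    weighted_sum = sum(i * w for i, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0


# The first input carries more weight than the second one.
strong = neuron_output([1.0, 0.0], weights=[0.6, 0.4])
weak = neuron_output([0.0, 1.0], weights=[0.6, 0.4])
print(strong, weak)
```

Real networks usually use smoother activation functions than a hard threshold, but the step function matches the simplified description above.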

graph LR

A[Neuron] --> B[Neuron]

B --> C[Neuron]

D[Neuron] --> B

A --> E[Neuron]

A --> F[Neuron]

D --> G[Neuron]

E --> H[Neuron]

E --> C

F --> H

G --> C

What looks a bit chaotic is basically a three-layer neural network. On the left-hand side you see the two input neurons, and on the right-hand side the two output neurons. Everything in between is called the 'hidden layer(s)', in this case one.
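The same wiring can be written down as data and evaluated layer by layer. The following Python sketch is only an illustration: the edges match the graph (inputs A and D, hidden neurons B, E, F, G, outputs C and H), while the weights and the threshold of 1.0 are invented values.

```python
# (source, target, weight): edges as in the graph, weights are made up.
EDGES = [
    ("A", "B", 0.5), ("D", "B", 0.5),
    ("A", "E", 1.0), ("A", "F", 1.0), ("D", "G", 1.0),
    ("B", "C", 1.0), ("E", "C", 0.5), ("G", "C", 0.5),
    ("E", "H", 0.5), ("F", "H", 0.5),
]
# Layers are evaluated left to right; the input layer is given by the caller.
LAYERS = [["B", "E", "F", "G"], ["C", "H"]]
THRESHOLD = 1.0


def forward(inputs: dict) -> dict:
    """Propagate 0/1 input activations through the hidden layer to the outputs."""
    activation = dict(inputs)
    for layer in LAYERS:
        for neuron in layer:
            total = sum(w * activation[src]
                        for src, dst, w in EDGES if dst == neuron)
            activation[neuron] = 1 if total >= THRESHOLD else 0
    return {name: activation[name] for name in LAYERS[-1]}


print(forward({"A": 1, "D": 0}))
print(forward({"A": 1, "D": 1}))
```

With these example weights, B only fires when both inputs are active, so the two outputs react differently depending on which inputs are on.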

The input neurons are linked to input variables for the neural network, such as:

- own health

- hunger

- need for sleep

The output neurons represent the result of the decision-making process, such as:

- heal yourself

- eat

- go to bed

In the Creatures case, some input neurons are wired to objects, for example, and if the agent approaches an object, the corresponding neuron is triggered. When the decision-making process is done, a possible result on the output neurons could be 'take object', because the object turns out to be interesting or necessary, or just because the agent got positive feedback the last time it did that.
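One simple way to picture "a neuron wired to an object" is a proximity check that produces one input value per known object type. This Python sketch is an assumption about how such a sensor could look, not how Creatures does it; `WorldObject`, `object_inputs` and the radius are hypothetical names and values.

```python
import math
from dataclasses import dataclass


@dataclass
class WorldObject:
    name: str
    x: float
    y: float


def object_inputs(agent_pos, objects, known_objects, radius=2.0):
    """Return one input value per known object type:
    1.0 if such an object is within `radius` of the agent, else 0.0."""
    ax, ay = agent_pos
    nearby = {o.name for o in objects
              if math.hypot(o.x - ax, o.y - ay) <= radius}
    return {name: 1.0 if name in nearby else 0.0 for name in known_objects}


world = [WorldObject("ball", 1.0, 1.0), WorldObject("food", 10.0, 0.0)]
print(object_inputs((0.0, 0.0), world, known_objects=["ball", "food"]))
```

The resulting dictionary can then be fed to the input neurons alongside values such as health or hunger.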

In my personal opinion, an AI and its environment should be structured by what the parts are used for rather than by the technology behind them. I tested some models which follow a strict line that, in short, can be reduced to a flow of three steps:

graph LR

A[Input] --> B[Decision]

B --> C[Action]

An AI-controlled entity needs to be able to receive and analyse input (e.g. positions, the world, its own parameters like health), needs to take a decision based on that input, and then needs to start an action (shoot, start a certain behaviour like follow, etc.). With these three steps in place, an approximate simulation of entities becomes possible.

With all the Neural Network Hype(tm), I asked myself: where do neural networks fit into the above flow? To me, they cover the decision part, which could also be handled by other decision mechanisms, ranging from simple tree structures to more complex ones.
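The three-step flow, with the decision step kept swappable, could look roughly like this in Python. It is a sketch for illustration only: the entity fields, the action names and both decision functions are invented, and `weighted_decision` is merely a stand-in for where a neural network would plug in.

```python
from typing import Callable, Dict

Percept = Dict[str, float]
DecisionFn = Callable[[Percept], str]


def sense(entity: dict) -> Percept:
    """Input step: collect the values the decision is based on."""
    return {"health": entity["health"], "hunger": entity["hunger"]}


def rule_based_decision(percept: Percept) -> str:
    """Decision step, variant 1: a tiny hand-written decision tree."""
    if percept["health"] < 0.3:
        return "heal"
    if percept["hunger"] > 0.7:
        return "eat"
    return "idle"


def weighted_decision(percept: Percept) -> str:
    """Decision step, variant 2: score every action and pick the highest.
    A neural network would take this place."""
    scores = {"heal": 1.0 - percept["health"],
              "eat": percept["hunger"],
              "idle": 0.5}
    return max(scores, key=scores.get)


def act(entity: dict, action: str) -> None:
    """Action step: apply the chosen action to the entity."""
    if action == "heal":
        entity["health"] = min(1.0, entity["health"] + 0.2)
    elif action == "eat":
        entity["hunger"] = max(0.0, entity["hunger"] - 0.5)


def tick(entity: dict, decide: DecisionFn) -> str:
    """One pass through Input -> Decision -> Action."""
    action = decide(sense(entity))
    act(entity, action)
    return action


creature = {"health": 0.9, "hunger": 0.8}
print(tick(creature, rule_based_decision), creature)
```

Because `tick` only depends on the signature of the decision function, the tree and the network-like variant can be exchanged without touching the input or action code.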

After researching the Creatures AI, though, I saw some potential in the learning idea and in how it makes it "easier" to incorporate more elements. Plus, it gives the lifelike effect of reacting differently when the same situation occurs twice (because the agent has learned or forgotten something).

So I went on and, using the tools and implementations above, created my own interpretation of a generated neural network, loosely following what I had heard about Creatures.

Once the first implementation was done, you basically feed values in and get values out. I researched the topic of neural network visualization and found some interesting approaches, but I wanted to see the "brain", as it is called in Creatures.

More on the actual implementation once some refinements have been made.
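The "same situation, different reaction" effect can be sketched with a single connection whose weight is pushed by feedback and slowly drifts back when nothing happens. This is a deliberately simple Python illustration with invented names and rates, not the learning mechanism Creatures actually uses.

```python
class LearnedLink:
    """Hypothetical link from an 'object nearby' input to a 'take object' output."""

    def __init__(self, weight=0.5, rest_weight=0.5,
                 learning_rate=0.2, forget_rate=0.05):
        self.weight = weight
        self.rest_weight = rest_weight
        self.learning_rate = learning_rate
        self.forget_rate = forget_rate

    def fires(self, stimulus=1.0, threshold=0.6) -> bool:
        """Does the agent react to the stimulus right now?"""
        return self.weight * stimulus >= threshold

    def reinforce(self, feedback: float) -> None:
        """Learning: feedback of +1 strengthens the link, -1 weakens it."""
        new_weight = self.weight + self.learning_rate * feedback
        self.weight = min(1.0, max(0.0, new_weight))

    def forget(self) -> None:
        """Forgetting: the weight drifts back towards its resting value."""
        self.weight += self.forget_rate * (self.rest_weight - self.weight)


link = LearnedLink()
before = link.fires()          # untrained reaction
link.reinforce(+1.0)           # positive feedback after taking the object
after_learning = link.fires()  # same stimulus, different reaction
for _ in range(20):            # a long time without any feedback
    link.forget()
after_forgetting = link.fires()
print(before, after_learning, after_forgetting)
```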

Visualization in WPF / .NET 7

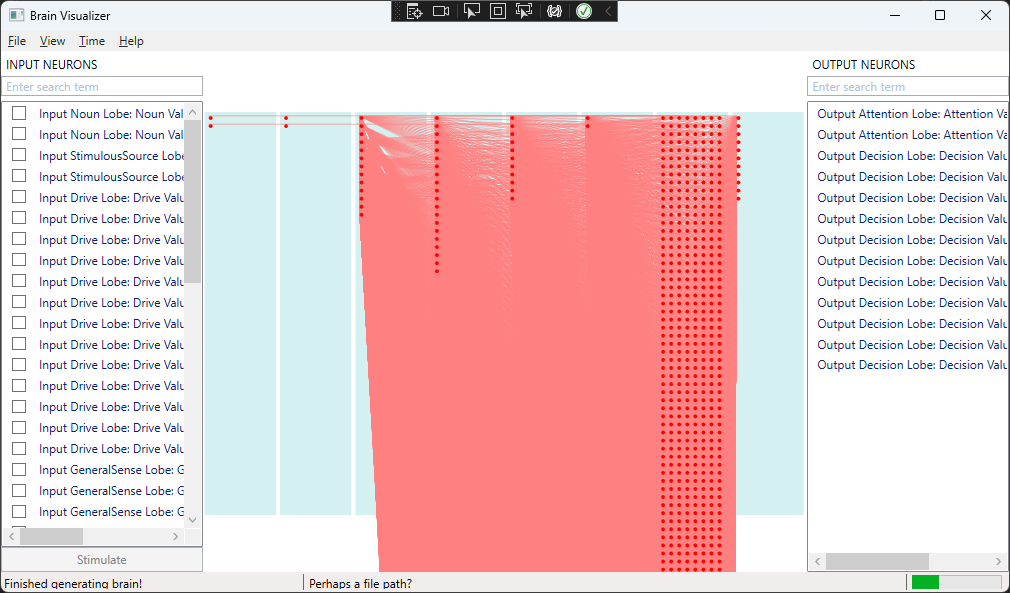

It turns out that the visualisation takes at least as much time as the neural network itself. Given my previous experience, I went down the .NET 7 with WPF road (MAUI does not make much sense in terms of application complexity). On the neural network side, I wrote a step-based generation library that supports, for example, a setup close to Creatures, but does not (yet) include the finer details (which results in an unconstrained and therefore BIG concept lobe):

Not only does generating such a relatively small neural network take some time (no performance tweaks applied yet), we also see an unnecessarily high number of neurons in the concept lobe (basically, what the AI can do). This will be reduced soonish.

The inputs and outputs of such AI creations are also very interesting. Creatures includes a chemical system that drives the input of some of the neurons, conveying environment parameters but also feedback (positive or negative) on previous actions.

The next screenshot shows a rather simple approach to this. The chemicals also need to decay over time, depending on the 'organism' they apply to ('Organism A' could show a higher rate of decay than 'Organism B').
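Organism-specific decay can be sketched as a per-tick multiplication. The Python below is only an illustration; the `Organism` class, the chemical name "reward" and the decay rates are assumptions for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Organism:
    name: str
    decay_rate: float                      # fraction of each chemical lost per tick
    chemicals: dict = field(default_factory=dict)

    def inject(self, chemical: str, amount: float) -> None:
        """Raise the level of a chemical, capped at 1.0."""
        level = self.chemicals.get(chemical, 0.0) + amount
        self.chemicals[chemical] = min(1.0, level)

    def tick(self) -> None:
        """Let every chemical decay by this organism's own rate."""
        for chemical in self.chemicals:
            self.chemicals[chemical] *= 1.0 - self.decay_rate


organism_a = Organism("Organism A", decay_rate=0.5)
organism_b = Organism("Organism B", decay_rate=0.1)
for organism in (organism_a, organism_b):
    organism.inject("reward", 1.0)
    organism.tick()
    organism.tick()
    print(organism.name, organism.chemicals["reward"])
```

After the same number of ticks, the organism with the higher decay rate has much less of the chemical left, so the same feedback fades faster for it.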

Project Structure

Those two simple views and the MVVM setup took me around three weeks, and the project structure is (imho) MVVM compliant:

Basic Principles

There are some principles which apply to the code structure:

- implementation against interfaces

- service-based architecture

  - object creation is done via one service, time management via another, and so on

- Dependency Injection (only c'tor injection)

  - to clearly see the dependencies at a single point

- TDD (Test-Driven Development)

  - first write tests, then use them as an example for the implementation

- readability over performance (at least in the beginning ;-))

  - line-of-code saving is for much more intelligent software engineers (see https://en.wikipedia.org/wiki/Dunning%E2%80%93Kruger_effect)

- cluster into small libraries

  - not yet applied to the WPF project (one would need to create separate projects for models, viewmodels and views, I guess)
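How the first few principles work together can be shown in a small sketch. It is written in Python for brevity, although the project itself is .NET, and every name in it (`TimeService`, `NeuronFactory`, `BrainBuilder` and so on) is hypothetical rather than taken from the real code base.

```python
from typing import List, Protocol


class TimeService(Protocol):
    """Interface for time management."""
    def now(self) -> float: ...


class NeuronFactory(Protocol):
    """Interface for object creation."""
    def create(self, name: str) -> dict: ...


class FixedTimeService:
    """Test double: always returns the same point in time."""
    def __init__(self, value: float) -> None:
        self._value = value

    def now(self) -> float:
        return self._value


class SimpleNeuronFactory:
    # Constructor injection: the only dependency is visible right here.
    def __init__(self, time_service: TimeService) -> None:
        self._time = time_service

    def create(self, name: str) -> dict:
        return {"name": name, "created_at": self._time.now()}


class BrainBuilder:
    def __init__(self, factory: NeuronFactory) -> None:
        self._factory = factory

    def build(self, names: List[str]) -> List[dict]:
        return [self._factory.create(name) for name in names]


def test_builder_uses_injected_services() -> None:
    """Written first (TDD); doubles as a usage example."""
    builder = BrainBuilder(SimpleNeuronFactory(FixedTimeService(42.0)))
    neurons = builder.build(["hunger", "eat"])
    assert [n["name"] for n in neurons] == ["hunger", "eat"]
    assert all(n["created_at"] == 42.0 for n in neurons)


test_builder_uses_injected_services()
```

Because `BrainBuilder` only knows the interfaces, the test can swap in a fixed clock without touching any production code.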
